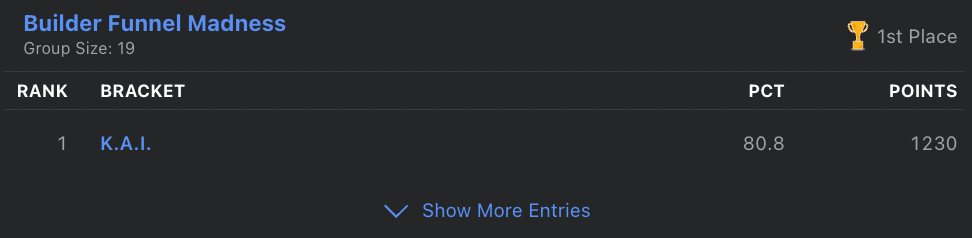

Let's talk about March Madness and how I used AI to win my office bracket pool this year! For this newsletter, I wanted to share a fun project that combines my love of sports with the power of AI tools.

The Idea

I've always loved March Madness and sports analytics, but never had the technical knowledge to build a full-on data model to predict game outcomes. With the rise of AI tools, I saw an opportunity to finally create something impressive without needing a data science degree.

My goal was simple: have the model place in the top three of my work bracket pool so I could win some money.

While I'd made some Excel sheets in the past to help with sports betting, nothing was ever too sophisticated. However, since I use AI tools daily and know how to prompt them effectively, I figured it was time to build something more powerful.

The Process

First, I needed data. I found a free Kaggle dataset with March Madness information from 2002-2024, then supplemented it with current team stats from Team Rankings.

Assuming someone else had tried this problem before, I used Perplexity to search for articles and videos with insights on predicting March Madness outcomes. This research pointed me toward key metrics: KenPom efficiency ratings, strength of schedule data, and advanced analytics like tempo.

Perplexity also suggested running thousands of simulations to account for variability. I chose to run 1,000 simulations per matchup (in retrospect, maybe I should have done 10,000).
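
For anyone curious what "running simulations" means in practice, here is a minimal sketch of the general idea. It is not the model's actual code. The function name `simulate_matchup`, the single-number team ratings, and the 11-point standard deviation for the final margin are all assumptions made up for illustration.

```python
import random

def simulate_matchup(rating_a, rating_b, n_sims=1000, margin_sd=11.0, seed=None):
    """Estimate P(team A wins) by drawing a random final margin n_sims times.

    rating_a / rating_b are hypothetical power ratings in points;
    margin_sd is an assumed game-to-game spread, not a fitted value.
    """
    rng = random.Random(seed)
    expected_margin = rating_a - rating_b  # points team A is expected to win by
    wins_a = 0
    for _ in range(n_sims):
        # One simulated game: expected margin plus random noise.
        margin = rng.gauss(expected_margin, margin_sd)
        if margin > 0:
            wins_a += 1
    return wins_a / n_sims

# Placeholder ratings, not real team data.
p = simulate_matchup(rating_a=25.0, rating_b=19.0, n_sims=1000, seed=42)
print(f"Team A wins {p:.1%} of simulations")
```

Passing a `seed` makes a run repeatable, which helps when comparing 1,000 runs against 10,000.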

To complete the dataset, I had Perplexity pull injury information to help the model accurately predict how injuries would affect team performance.

With all my data collected, I turned to Claude 3.7 Sonnet to build the model. I asked it to create a system that would:

- Run 1,000 simulations based on the compiled data

- Apply different weights to data points based on what research showed was most important

- Analyze historical data for similar matchups

- Provide win percentages, projected scores, team stat comparisons, and additional insights
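
The weighting step in that list can be sketched roughly as follows. The metric names, the weights, and the 0-to-1 scaling are all hypothetical, since the real model's weights aren't listed here; this only shows the shape of a weighted rating and a side-by-side stat comparison.

```python
# Hypothetical weights; the real model's weights are not given in this post.
WEIGHTS = {"efficiency_margin": 0.6, "strength_of_schedule": 0.3, "tempo": 0.1}

def composite_rating(stats, weights=WEIGHTS):
    """Weighted sum of a team's stats (assumed already scaled to 0-1)."""
    return sum(weights[name] * stats[name] for name in weights)

def compare(team_a, team_b, weights=WEIGHTS):
    """Return both composite ratings plus team A's edge on each metric."""
    edges = {name: team_a[name] - team_b[name] for name in weights}
    return composite_rating(team_a, weights), composite_rating(team_b, weights), edges

# Placeholder stats for illustration, not real team data.
team_a = {"efficiency_margin": 0.9, "strength_of_schedule": 0.7, "tempo": 0.4}
team_b = {"efficiency_margin": 0.6, "strength_of_schedule": 0.8, "tempo": 0.5}

rating_a, rating_b, edges = compare(team_a, team_b)
print(f"Team A: {rating_a:.2f}, Team B: {rating_b:.2f}")
for name, edge in edges.items():
    print(f"  {name}: {edge:+.2f}")
```

Keeping the weights in one dictionary makes it easy to adjust them when the research suggests one metric matters more than another.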

After some back-and-forth prompting, I had a working March Madness prediction model ready to go!

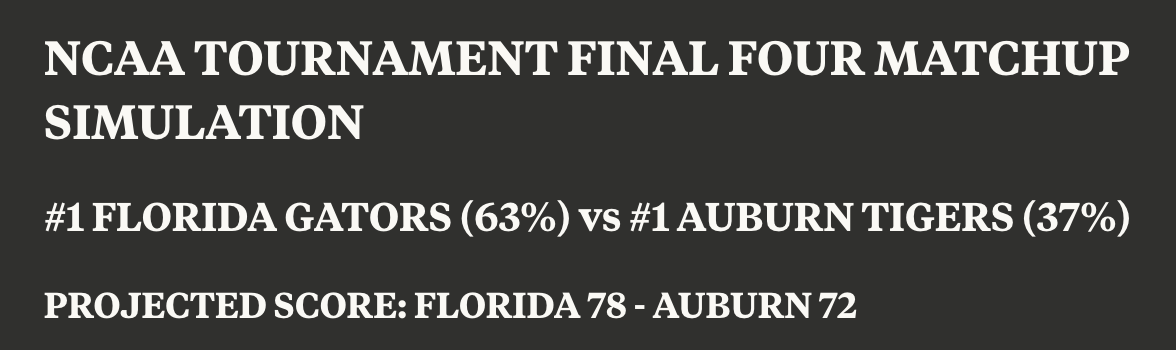

I had to show off how accurate the model was for some games. This projection was off by only 2 points!

The Results

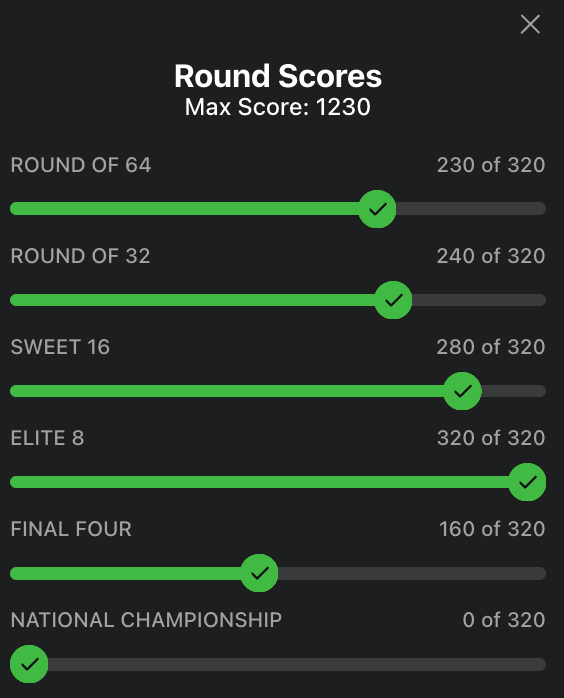

The model started hot, going 2-for-2 in the First Four games. But then it quickly fell apart in the opening rounds. I thought the dream was over, that I'd spent hours building something that couldn't beat the inherent randomness of March Madness.

But once we hit the Sweet Sixteen, the model started to cook.

In the model's defense, the first round had many coin-flip matchups where it just slightly chose wrong (perhaps where those 10,000 simulations would have helped). But once we hit the Sweet 16, it became remarkably accurate.
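
To put a rough number on how much more simulations could help, here is a small sketch using the standard error of a simulated win percentage, sqrt(p(1 - p) / n). The helper name `win_prob_standard_error` is made up for this example, and the formula assumes each simulated game is independent.

```python
import math

def win_prob_standard_error(p, n_sims):
    """Sampling noise in a win percentage estimated from n_sims independent runs."""
    return math.sqrt(p * (1 - p) / n_sims)

# A true coin-flip matchup (p = 0.5) is the noisiest case.
for n in (1_000, 10_000):
    se = win_prob_standard_error(0.5, n)
    print(f"{n:>6} sims: +/- {1.96 * se:.1%} (approx. 95% range)")
```

For a 50/50 game this works out to a range of roughly 3 percentage points either way at 1,000 simulations and roughly 1 point at 10,000. More runs would steady the estimate, but a matchup the model rates near 50/50 stays a near coin flip.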

When I first reviewed the simulations, I was skeptical because the model seemed far too biased toward higher seeds. It picked all four #1 seeds to make the Final Four, which felt too simple. Did it really do any analysis? That's what your coworker who doesn't watch sports would do. Picking the favorites isn't a bad strategy, but an all-#1 Final Four is extremely rare: it had happened only once since seeding began in 1979 (in 2008).

But then... it actually happened.

The model loved the #1 seeds, and after watching the tournament, they did dominate most of their games (though every team except Duke had some close calls). Having a perfect Final Four prediction was incredible and pretty much guaranteed my top-three placement in the work pool. Mission accomplished!

Everything else was just gravy.

The model picked Duke to win the championship, and they were well on their way before Houston's amazing comeback victory.

In the end, though, the model's bracket still pulled through and won the entire work pool!

What Worked Well

- The bottom line: We won the work pool, which was the best-case scenario.

- The predictions: A strong Sweet 16 and a perfect Elite 8, with all four Final Four teams called correctly.

- The analysis quality: Each prediction came with thorough supporting data and reasoning. All the picks made sense, and the model even explained potential weaknesses in its own predictions.

- The development process: From idea to winning model in just a couple of hours. AI truly helped take this concept from zero to hero in record time.

What Didn't Work So Well (and Plans for Next Year)

- Early round accuracy: The first two rounds weren't the best. For next year, we need to find a way to better predict those coin-flip matchups in the early stages of the tournament.

- Score projections: While some projected scores were spot on (like the Florida-Auburn game), others were off. The point differentials seemed to follow similar patterns regardless of matchup. For next year, I'd like to refine this aspect to potentially nail betting spreads.
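
One way to check the score-projection issue next year is to compare projected and actual margins directly. This is only a sketch: `margin_report` is a hypothetical helper and the numbers are placeholders, not real tournament results. If the projected margins barely vary while the actual ones swing widely, that would confirm the "similar patterns regardless of matchup" problem.

```python
from statistics import mean, pstdev

def margin_report(games):
    """games: list of (projected_margin, actual_margin) pairs, in points."""
    projected = [p for p, _ in games]
    actual = [a for _, a in games]
    errors = [abs(p - a) for p, a in games]
    return {
        "mean_abs_error": mean(errors),          # average miss, in points
        "projected_spread": pstdev(projected),   # how much projections vary
        "actual_spread": pstdev(actual),         # how much real margins vary
    }

# Placeholder numbers for illustration only, not real tournament results.
games = [(5, 3), (6, 14), (4, -2), (5, 21), (6, 7)]
report = margin_report(games)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```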

Conclusion

Version 1 of my AI-powered March Madness model exceeded expectations! We took an idea from zero to hero, won the work pool, and I gained valuable experience using AI tools for prediction modeling. While it didn't correctly predict the champion, I can't complain about the results.

I'm already looking forward to Version 2 for next year's tournament. With some tweaks to improve early-round predictions and score projections, who knows how accurate it could become!

And no, I don't want to hear anyone say I got lucky because the top seeds won. I'll be living off this high until next March.

How AI Won My March Madness Bracket Pool

Learn how I built an AI prediction model that perfectly called the Final Four and won my office March Madness bracket pool.